First steps

In this chapter, you're going to learn about:

- Finding information that can help you confirm whether and how you should be using a technology.

- How software package managers can be helpful for installing tools and when they're best avoided.

- How to poke around under the hood to better understand how a technology is built and how, when necessary, it can be fixed.

- How to devise an appropriate plan of attack as you finally prepare to begin your learning.

Then you can blindly and recklessly rush in.

So how do you scope out a technology's larger ecosystem? Keep reading.

The big picture

When starting from scratch, I'll almost always run a web search using nothing but the technology's name. More often than not, the first two links I'm shown will be the tech's official website and its Wikipedia page.

Both will probably contain helpful information, but my first stop will usually be Wikipedia. That's because I'm confident that Wikipedia's predictable page format will quickly get me to the technology's core function and the product category within which it lives.

To illustrate, here's what the first sentence of Wikipedia's article on PHP told me:

PHP is a server-side scripting language designed for web development but also used as a general-purpose programming language.

On the other hand, here's what the world's biggest encyclopedia had to say about Python:

Python is an interpreted high-level programming language for general-purpose programming.

Both introductions quickly communicate their products' core functions: "server-side scripting..." and "interpreted high-level...", respectively. The inline links point readers to separate standalone pages devoted to explaining topics like "server-side scripting" and "interpreted language." Reading through those pages will expose you to other tools built to meet similar needs and give you greater insight into the value and purpose of such tools.

Why is that information so important? Because you want to be sure that you're making the right choice and that your learning plan is a good fit for the technology.

This kind of high-level context can be really helpful for keeping you focused as you become more familiar with the subject. But spending a few more minutes browsing through the rest of the Wikipedia page and the official documentation site can often add important structural knowledge.

For instance, is the technology integrated with your operating system by default? Is the current stable release going to do everything you need or should you install a beta version? Are there any environment dependencies that might conflict with your current system setup? Is the technology extensible to allow for future growth or customization?

Once you're plugged into the technology, it's time to think about how to plug the technology into your system.

Software installation

Installing programming language environments or administration tools can get tricky. Even if you've got a good software repository available, there are still loads of choices you'll face. So, if you don't mind, I'll take a couple of minutes of your life to talk about some common options, approaches, and considerations. One day you may thank me.

First of all, if you've never had the pleasure of using Linux software repositories, I should describe how they work.

Software package managers

Most Linux distributions ship with software package managers, like APT for the Debian/Ubuntu family and RPM/YUM for Fedora/CentOS. The managers oversee the installation and administration of software from curated online repositories, using a regularly updated local index that mirrors the state of the remote repo. Practically, this means Linux users can request a package using a single, short command like this one:

sudo apt install apache2

The manager will then query the online repo, assess the necessary dependencies and their current state, download all needed packages, and install and configure whatever files need installing and configuring.

But the real beauty of a package manager is how it actively maintains overall system stability. For instance, when a patched version of the software is added to an upstream repository, the installed version on your computer will be updated the next time you run a system upgrade (or automatically, if your system is set up for unattended upgrades). Similarly, when you decide to uninstall a package you're no longer using, the package manager can survey the system state and remove only those dependencies that are not being used by any other package.
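To make that "remove only unused dependencies" idea a little more concrete, here's a minimal Python sketch of the kind of bookkeeping involved. It's a simplification, not how APT or YUM are actually implemented: the dependency map is made up and heavily trimmed (real packages have far longer dependency lists), and the sketch assumes the manager tracks which packages you asked for explicitly. Anything installed that can't be reached from that set is treated as removable, which is roughly the idea behind apt autoremove.

```python
from collections import deque

# Made-up, heavily simplified dependency map: package -> its dependencies.
# A real package manager reads this information from its repository index.
DEPENDS = {
    "apache2": ["apache2-bin", "apache2-data"],
    "apache2-bin": ["libapr1", "libssl3"],
    "apache2-data": [],
    "libapr1": [],
    "libssl3": [],
    "curl": ["libssl3"],
}


def required_packages(manual, depends):
    """Return every package reachable from the explicitly installed set."""
    needed = set()
    queue = deque(manual)
    while queue:
        pkg = queue.popleft()
        if pkg in needed:
            continue
        needed.add(pkg)
        queue.extend(depends.get(pkg, []))
    return needed


def orphans(installed, manual, depends):
    """Installed packages that nothing the user asked for still needs."""
    return set(installed) - required_packages(manual, depends)


installed = set(DEPENDS)
# The user explicitly installed apache2 and curl, then uninstalls apache2.
manual_after_removal = {"curl"}
print(sorted(orphans(installed - {"apache2"}, manual_after_removal, DEPENDS)))
```

Running it prints ['apache2-bin', 'apache2-data', 'libapr1']: libssl3 survives because curl still depends on it.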

The concept of curated software repositories and integrated package managers has been so successful that it's been imitated for other operating systems. macOS users will be familiar with Homebrew and, more recently, OneGet was launched to manage software on Windows 10. You're a bit late to the party, but we Linux folk are nevertheless glad you're finally here.

Considering the reliability and security benefits of using managed software, you'd normally avoid getting your software any other way. But that won't always be true.

For one thing, not all software can be found on official repositories (especially non-Linux repos). There will also be times when the release version that's available in the repos is a bit out of date, something I've recently encountered with PHP. Sometimes your project simply requires customization that isn't possible with official versions.

Alternate sources

I don't think I need to tell Windows users how to download and click on packages to install them. Explaining how to install Git on your machine and locate and pull package repos is probably also unnecessary for anyone reading this book. On the other hand, describing how to manually compile from source code using make could get a bit too detailed for this discussion.

So what's this little section really all about? It's about making sure you're aware of all the possibilities, including language-specific package managers like npm (for JavaScript on Node.js), pip (for Python), and RubyGems (for Ruby, obviously). Now you know.

Environment orientation

If you've had any experience writing code, then you'll know how complicated it can be to set up your programming and compile environment. Even something as simple as getting a text editor or Integrated Development Environment (IDE) just the way you want it can be a challenge.

Since choosing a set of tools can be a personal and project-specific process, my general advice wouldn't be very helpful. But before digging too deeply into a project, it's worth doing some reconnaissance. Whether you're inclined to select Eclipse, Visual Studio Code, Atom, or Vim, make sure you understand how it will work within the rest of your stack and where your code will live.

Understanding where your important stuff ends up: now that's something worthy of some serious discussion. But let me first take a step back and talk about GUI desktop programs.

Full disclosure: I'm a Linux admin by trade and, as a result, I've never met a mouse that didn't make me nervous. The command line shell is where I do most of my work and I would never give up its power and efficiency. In fact, all three (Pbook, Ebook, and HTML) editions of this book were written in a plain text editor and typeset entirely from the command line using Pandoc and LaTeX.

But despite my obviously irrational obsessions, I will ask you to trust me when I say that GUIs have their weaknesses. Software built on graphic interfaces tends to shield critical program files from your view. That can sometimes be convenient, especially for less experienced users.

But when the business of the software you're using is development or administration, then the lack of visibility can be a problem. Sometimes you just need to work directly at the file system level.

With that in mind, it can be useful to devote a few minutes to looking around while you're unpacking a new tool. Using the product documentation and your OS search tools, see if you can find where the tool's configuration, data, and binary executable files are parked.
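If you'd like to script some of that reconnaissance on a Linux machine, here's a minimal Python sketch. It assumes the common conventions (configuration under /etc, data under /var, and an executable somewhere on your PATH, usually under /usr) and a tool whose files and directories are named after the tool itself. The find_tool_files helper and the directory list are my own illustrations, and plenty of real tools will break those assumptions.

```python
import os
import shutil

# Conventional Linux locations (an assumption, not a guarantee):
# config under /etc, state, logs, and caches under /var.
CONVENTIONAL_DIRS = {
    "config": ["/etc"],
    "data": ["/var/lib", "/var/log", "/var/cache"],
}


def find_tool_files(name, dirs=CONVENTIONAL_DIRS):
    """Report where a tool's executable, config, and data appear to live."""
    # shutil.which searches the directories listed in $PATH.
    found = {"binary": shutil.which(name)}
    for kind, roots in dirs.items():
        # Look for a file or directory named after the tool in each root.
        found[kind] = [
            os.path.join(root, name)
            for root in roots
            if os.path.exists(os.path.join(root, name))
        ]
    return found


if __name__ == "__main__":
    for kind, location in find_tool_files("apache2").items():
        print(f"{kind}: {location}")
```

On an Ubuntu server with Apache installed, this would typically turn up /etc/apache2 along with directories under /var/log and /var/lib, but treat an empty result as a cue to check the documentation rather than proof that nothing exists.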

To get you started, here's what you might find in Linux. By convention, Linux applications usually keep their configuration files within the /etc/ directory hierarchy, program data files within /var/, and binary executables in /usr/. That's the general plan. Expect surprises.

The "Hello World" test run

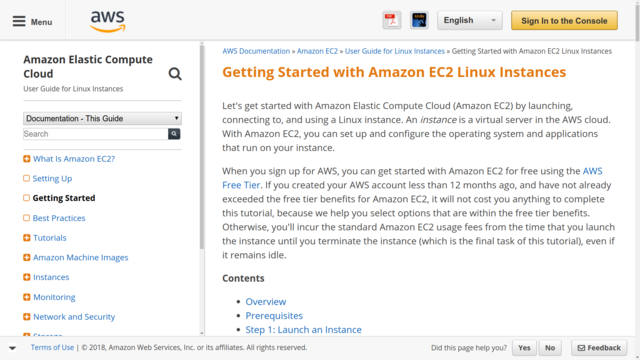

Once you've made your choice and installed everything that needs installing, there's only one more step before you can dive into your first serious project: confirm everything's working by running some kind of "Hello World" task.

Many technologies, like AWS's EC2 service illustrated in figure 4.1, come with built-in sample projects, and you should be able to find quick-start tutorials online for those that don't. But it's worth asking whether those sample projects might have more (or perhaps less) to teach us than we initially think.

Figure 4.1: An AWS documentation page containing advice for getting started with their EC2 virtual server service
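For tools you drive from the command line, even the "Hello World" step can be scripted, so you can rerun it after every configuration change. Here's a minimal Python sketch. The hello_world_check helper is hypothetical, and the Python interpreter running the script simply stands in for whatever runtime or tool you're actually testing; swap in your own command and expected output.

```python
import subprocess
import sys


def hello_world_check(cmd, expected):
    """Run a tiny program and confirm it exits cleanly and prints `expected`."""
    try:
        result = subprocess.run(cmd, capture_output=True, text=True, timeout=30)
    except (FileNotFoundError, subprocess.TimeoutExpired) as err:
        # The tool isn't installed (or isn't on PATH), or it hung.
        return False, str(err)
    output = result.stdout.strip()
    return result.returncode == 0 and output == expected, output


# Example: confirm the interpreter running this script can say hello.
ok, output = hello_world_check(
    [sys.executable, "-c", "print('Hello, World!')"], "Hello, World!"
)
print("PASS" if ok else "FAIL", output)
```

A missing executable comes back as a failure with the error message rather than a crash, which is handy when you're checking a freshly built environment.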

Let me explain. First the bad news. I've come across a small number of elaborate and official "Hello World" demos that successfully make amazing things happen with just a couple of clicks. But, because everything is so completely abstracted away, they actually teach nothing useful about the technology.

Sometimes the only way to really understand something is to walk yourself through the process the slow and manual way. So if a demo leaves you just as confused as you were before you started, don't take it personally: it might just have been a poorly designed demo. If you want to move ahead with your plans, you may have to take matters into your own hands.

Now for the good news. In many cases, a home-made variation of the "Quick Start" demo can get you most of the way to your goal. Sometimes quickly scanning a getting-started guide for just the key details you're missing can be enough to let you fire up a virtual environment, pull the trigger, and see what happens. Didn't work? Big deal: note the results and try again.

The point is that you don't always need to be carefully walked step-by-step through the process. Sometimes being a bit aggressive can save you loads of time and energy. And the fact that you're working with a disposable virtual machine means that mistakes carry little or no risk.

Of course, "scan-and-run" may not be a great idea for full-stack, multi-tier environments like AWS. There, starting at the beginning and working sequentially through a planned curriculum can help you avoid missing critical details, like the way Amazon's billing and security work. Trust me: if you don't like the idea of surprise four-digit monthly service charges or compromised infrastructure, then you don't want to skip the billing and security basics.

So take a moment and think about the scope of the technology you're trying to learn. You could gain important insights into how to best approach the problem.

Case study

As he gets closer to moving his DevOps infrastructure to live production, Kevin realizes he's going to have to focus some attention on security. Since his servers are running Ubuntu Linux, a bit of research tells him that there are three main firewall alternatives: Ubuntu's own Uncomplicated Firewall (UFW), iptables, and Shorewall. All, by happy coincidence, are discussed in depth in chapters 9 and 10 of my Linux in Action. Honestly, I'm not sure why Kevin hasn't already gone out and purchased his own copy.

But he hasn't. Instead, he carefully crafted some smart internet search strings that led him to quick-start tutorials for each tool. One at a time, he visually scanned through the guides, copying the key commands he thought would work for his needs. He then launched VMs running his company's applications, installed and configured a firewall technology and, from a separate computer, tested accessibility, confirming that the right requests were allowed through and the wrong requests were refused.

In the end, Kevin decided that Shorewall involved a bit too much of a learning curve, and iptables seemed a bit too intimidating. UFW hit the mark perfectly. Kevin realized that if his needs had been a bit more complex, he would have had no choice but to commit to diving deeper into a more complex or frightening platform. But right now, he's got everything under control.
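Kevin's final step, checking from a separate computer which requests actually get through, is easy to script so it can be repeated after every rule change. Here's a minimal Python sketch. The port_is_open and check_firewall helpers are my own hypothetical illustrations, not part of UFW, and a successful TCP connection is only a rough stand-in for "allowed": it says nothing about UDP services.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts (often a firewall silently
        # dropping packets), and unreachable hosts.
        return False


def check_firewall(host, expectations, timeout=3.0):
    """Compare actual reachability with what the firewall should allow.

    `expectations` maps port -> True (should be reachable) or False (blocked).
    Returns {port: actual_result} for every port that didn't match.
    """
    mismatches = {}
    for port, should_be_open in expectations.items():
        actual = port_is_open(host, port, timeout)
        if actual != should_be_open:
            mismatches[port] = actual
    return mismatches
```

Run from a separate machine, something like check_firewall("192.0.2.10", {22: True, 80: True, 3306: False}) should return an empty dict if the firewall is doing its job. The address (from a range reserved for documentation) and the port list are placeholders for your own setup.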